Hello. I'm DVM, and I talk about deep learning for 5 minutes using Keras.

Today I'm going to talk about multinomial classification using the MNIST example.

Let's classify handwritten digits using the MNIST dataset.

Wikipedia says multinomial classification is the problem of classifying instances into one of three or more classes.

I will introduce 5 techniques.

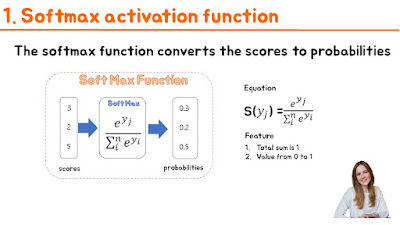

First, the softmax activation function.

In order to classify three or more classes, we use the softmax activation function, which turns the model's outputs into probabilities that sum to 1.
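To make softmax concrete, here is a minimal sketch in plain Python. The function name `softmax` and the input scores are made up for illustration; Keras computes this for you inside the model.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    # Subtract the largest score first so math.exp cannot overflow.
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs)       # the biggest score gets the biggest probability
print(sum(probs))  # very close to 1
```

The class with the largest probability is taken as the prediction.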

Second, one-hot encoding.

In order to classify three or more classes, it is better to use one-hot encoding.

The position of the correct class is represented by 1 and every other position by 0.
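As a rough illustration, one-hot encoding and decoding can be sketched in plain Python. The helper names `one_hot` and `decode` are hypothetical; later in this post Keras does the encoding with `utils.to_categorical`.

```python
def one_hot(label, num_classes):
    """Return a list with 1.0 at index `label` and 0.0 elsewhere."""
    if not 0 <= label < num_classes:
        raise ValueError("label must be in [0, num_classes)")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

def decode(vector):
    """Recover the label: the index of the largest value."""
    return max(range(len(vector)), key=lambda i: vector[i])

encoded = one_hot(3, 10)
print(encoded)          # 1.0 sits at index 3
print(decode(encoded))  # 3
```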

Third, standardization.

If the data are widely spread, normalization gathers them into a common range.
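Here is a small sketch of the simple scaling this post uses later: dividing every value by the maximum, so everything lands between 0 and 1. The function name `scale_by_max` and the pixel values are assumptions for illustration only.

```python
def scale_by_max(values):
    """Divide every value by the largest one, mapping [0, max] to [0, 1]."""
    largest = max(values)
    if largest == 0:
        # Nothing to scale if every value is zero.
        return [0.0 for _ in values]
    return [v / largest for v in values]

pixels = [0, 51, 102, 255]  # hypothetical grayscale intensities
scaled = scale_by_max(pixels)
print(scaled)  # [0.0, 0.2, 0.4, 1.0]
```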

Fourth, the cross-entropy cost function.

In order to classify three or more classes, we use the cross-entropy cost function together with the softmax activation function.
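To see what this cost measures, here is a minimal sketch for a single sample. The function name, the `eps` safeguard, and the example probabilities are all assumptions for illustration; Keras provides the real one as `categorical_crossentropy`.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Cross entropy between a one-hot target and predicted probabilities."""
    # eps keeps log() away from zero; it is an illustrative safeguard.
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))

target = [0.0, 1.0, 0.0]        # the true class is index 1
confident = [0.05, 0.90, 0.05]  # hypothetical softmax output, mostly right
unsure = [0.40, 0.30, 0.30]     # hypothetical softmax output, mostly wrong

print(categorical_cross_entropy(target, confident))  # small cost
print(categorical_cross_entropy(target, unsure))     # larger cost
```

The cost is small when the probability given to the correct class is close to 1, and it grows as that probability shrinks.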

Fifth, stochastic gradient descent.

If the learning rate is too big, the cost function diverges.

If the learning rate is too small, learning is very slow and the cost function can get stuck in a local minimum that is not the optimum.

We adjust the learning rate until the cost value becomes small.
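The effect of the learning rate can be sketched with a toy example. Note the assumptions: this uses plain (not stochastic) gradient descent on a made-up one-dimensional cost, cost(w) = w**2, which has only one minimum, so it shows divergence and slow progress but not the local-minimum case.

```python
def gradient_descent(learning_rate, start=5.0, steps=50):
    """Minimize cost(w) = w**2, whose gradient is 2*w."""
    w = start
    for _ in range(steps):
        # Move against the gradient, scaled by the learning rate.
        w = w - learning_rate * 2 * w
    return w

for lr in (1.1, 0.001, 0.1):
    w = gradient_descent(lr)
    print(f"lr={lr}: w={w:.4g}, cost={w * w:.4g}")
```

With 1.1 the value of `w` grows at every step, with 0.001 it has barely moved from 5 after 50 steps, and with 0.1 it ends up very close to the minimum at 0.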

Let's practice coding using Keras.

Let's import the libraries

In [3]:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

from tensorflow.keras import utils

from tensorflow.keras.datasets import mnist

import numpy as np

import matplotlib.pyplot as plt

Let's define how many classes to classify

In [5]:

nb_classes = 10

Let's load the training and test data

In [16]:

(x_train,y_train),(x_test,y_test) = mnist.load_data()

Let's check the data by plotting it

In [9]:

plt.imshow(x_train[0].reshape(28,28),cmap='Greys')

In [11]:

x_train.shape

Out[11]:

(60000, 28, 28)

In [17]:

x_train = x_train.reshape(x_train.shape[0],

x_train.shape[1]*x_train.shape[2])
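The reshape above turns each 28x28 image into one row of 784 values. A tiny sketch of the same idea in plain Python, with a made-up 2x3 image and a hypothetical helper `flatten`:

```python
def flatten(image):
    """Concatenate the rows of a 2-D image into one flat list."""
    return [pixel for row in image for pixel in row]

tiny_image = [[1, 2, 3],
              [4, 5, 6]]  # hypothetical 2x3 image
flat = flatten(tiny_image)
print(flat)     # [1, 2, 3, 4, 5, 6]
print(28 * 28)  # 784 values per MNIST image
```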

In [19]:

x_train.shape

Out[19]:

(60000, 784)

In [20]:

x_train.max()

Out[20]:

255

Let's normalize so the model learns well

Let's convert the integers to floats and normalize them

In [22]:

x_train = x_train.astype('float32')/x_train.max()

In [23]:

y_train

Let's apply one-hot encoding

In [24]:

y_train = utils.to_categorical(y_train,nb_classes)

In [25]:

y_train

Let's transform the test data the same way as the training data

In [26]:

x_test = x_test.reshape(x_test.shape[0],

x_test.shape[1]*x_test.shape[2])

In [27]:

x_test = x_test.astype('float32')/255

In [30]:

y_test = utils.to_categorical(y_test,nb_classes)

Let's make a 1-layer Sequential model

Multinomial classification problems use softmax and cross entropy

In [33]:

model = Sequential()

model.add(Dense(nb_classes,input_dim=784))

model.add(Activation('softmax'))

In [34]:

model.summary()
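The summary reports the number of trainable parameters. For a single Dense layer this can be checked by hand: one weight per input-output pair plus one bias per output. The helper name `dense_param_count` is made up for this sketch; the Activation layer adds no parameters.

```python
def dense_param_count(input_dim, units):
    """Weights (input_dim * units) plus one bias per unit."""
    return input_dim * units + units

# 784 input pixels, 10 output classes.
print(dense_param_count(784, 10))  # 7850
```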

Let's use categorical cross entropy

In [36]:

model.compile(loss ="categorical_crossentropy",

optimizer='sgd',metrics = ['accuracy'])

In [37]:

history = model.fit(x_train,y_train,epochs =15)
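As a side note, `fit` is called here without a `batch_size`, so Keras uses its default of 32 samples per weight update. Assuming the standard 60,000 MNIST training images, the number of updates can be worked out like this (the helper name `steps_per_epoch` is just for this sketch):

```python
import math

def steps_per_epoch(num_samples, batch_size=32):
    """Number of mini-batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)

steps = steps_per_epoch(60000)
print(steps)       # 1875 updates in one epoch
print(steps * 15)  # 28125 updates over 15 epochs
```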

Let's evaluate

In [38]:

score = model.evaluate(x_test,y_test)

print(score)
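Because the model was compiled with `metrics=['accuracy']`, `score` holds the loss first and the accuracy second. A rough sketch of how accuracy can be computed from one-hot targets and predicted probabilities, using made-up values and hypothetical helper names:

```python
def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])

def accuracy(y_true, y_pred):
    """Fraction of samples whose most probable class is the true class."""
    correct = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

# Hypothetical one-hot targets and softmax outputs for four samples.
y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
y_pred = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.4, 0.3], [0.2, 0.5, 0.3]]
print(accuracy(y_true, y_pred))  # 0.75, the third sample is misclassified
```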

Full code

In [ ]:

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Activation

import numpy as np

from tensorflow.keras import utils

from tensorflow.keras.datasets import mnist

import matplotlib.pyplot as plt

nb_classes = 10

(x_train,y_train),(x_test,y_test) = mnist.load_data()

plt.imshow(x_train[0].reshape(28,28),cmap='Greys',interpolation='nearest')

x_train = x_train.reshape(x_train.shape[0],x_train.shape[1]

*x_train.shape[2])

x_train = x_train.astype('float32')/x_train.max()

y_train = utils.to_categorical(y_train,nb_classes)

x_test = x_test.reshape(x_test.shape[0],x_test.shape[1]*x_test.shape[2])

x_test = x_test.astype('float32')/255

y_test = utils.to_categorical(y_test,nb_classes)

model = Sequential()

model.add(Dense(nb_classes, input_dim=784))

model.add(Activation('softmax'))

model.compile(loss='categorical_crossentropy',optimizer='sgd',

metrics=['accuracy'])

history = model.fit(x_train,y_train,epochs=15)

score = model.evaluate(x_test,y_test)

print(score[1])
