Hello. I'm DVM, and I talk about TensorFlow certification. Today, I will explain the basic concepts of linear regression.

If you haven't seen the previous lecture, I recommend watching it first.

First. What is linear regression? ->

Wikipedia says that, in linear regression, the relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data.

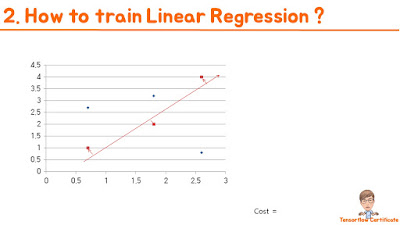
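As a minimal sketch of what a linear predictor function looks like in code, the snippet below evaluates a hypothesis of the form w * x + b. The function name `predict` and the parameter values are assumptions chosen only for illustration; they do not come from the lecture.

```python
def predict(x, w, b):
    """Linear predictor: weight times input plus bias."""
    return w * x + b


# Hypothetical parameters, chosen only for illustration.
w, b = 2.0, 0.5

# Evaluate the same line at a few input values.
predictions = [predict(x, w, b) for x in [0.0, 1.0, 2.0]]
print(predictions)
```

In linear regression, w and b are the unknown model parameters that get estimated from the data instead of being written by hand.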

Second. How do we train a linear regression model? ->

The linear regression equation is obtained by adjusting the weight and bias. The weight and bias are found by minimizing the difference between the correct answer and the output of the linear hypothesis equation. This difference is measured by a cost function.

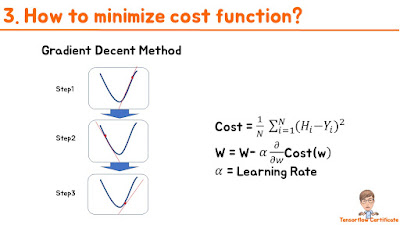
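The difference between the correct answer and the hypothesis can be sketched as a mean squared error. This is an illustrative sketch under stated assumptions: the function name `mean_squared_error` and the toy data (points lying exactly on y = 2x) are hypothetical and not taken from the lecture.

```python
def mean_squared_error(xs, ys, w, b):
    """Average of the squared differences between w * x + b and the correct answer y."""
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    return sum(e * e for e in errors) / len(errors)


# Hypothetical toy data lying exactly on the line y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

# A hypothesis that matches the data has zero cost.
perfect_cost = mean_squared_error(xs, ys, w=2.0, b=0.0)

# A hypothesis with the wrong weight has a larger cost.
worse_cost = mean_squared_error(xs, ys, w=1.0, b=0.0)

print(perfect_cost, worse_cost)
```

Training means searching for the weight and bias that make this number as small as possible.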

Third. How do we minimize the cost function? Because the cost function is built from the square of the error, it has a convex form.

Therefore, the minimum can be found by repeatedly moving the weight and bias against the derivative of the cost, with each step equal to the derivative multiplied by the learning rate. This method is called gradient descent.

If the learning rate is too large, training will not converge.

If the learning rate is too small, training will take a long time.
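The effect of the learning rate can be sketched with a plain-Python gradient descent on a mean squared error cost. This is a sketch under stated assumptions, not the optimizer TensorFlow uses internally: the helper names, the toy data (points on y = 2x), the starting point w = b = 0, the step count, and the three learning rates are all hypothetical choices for illustration.

```python
def cost(xs, ys, w, b):
    """Mean squared error of the hypothesis w * x + b."""
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    return sum(e * e for e in errors) / len(errors)


def gradient_descent(xs, ys, learning_rate, steps):
    """Run plain gradient descent on the mean squared error, starting from w = b = 0."""
    w, b = 0.0, 0.0
    m = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / m
        grad_b = 2.0 * sum(errors) / m
        # Step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical toy data lying exactly on the line y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

initial_cost = cost(xs, ys, 0.0, 0.0)
final_costs = {}
for lr in (0.001, 0.1, 0.5):
    w, b = gradient_descent(xs, ys, learning_rate=lr, steps=200)
    final_costs[lr] = cost(xs, ys, w, b)
    print(lr, final_costs[lr])
```

With these assumed values, the run with 0.1 ends with a cost close to zero, the run with 0.001 is still far from the minimum after the same number of steps, and the run with 0.5 ends with a cost larger than where it started.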

Fifth. Practice using TensorFlow. Let's build a linear regression model using TensorFlow.

In the last lecture, we built a simple TensorFlow graph. First, we made an operation using tf.constant, which is a node in the graph. Second, we launched the default graph using tf.Session. Third, we calculated the operation using session.run.

In this lecture, we use a TensorFlow variable (tf.Variable) and the gradient descent optimizer function. A TensorFlow variable is a trainable variable. Its constructor requires an initial value for the variable. The initial value defines the type and shape of the variable, and variables must be initialized by running tf.global_variables_initializer. If we use tf.train.GradientDescentOptimizer, we have to set the learning rate ourselves, because it is a hyperparameter.

Let's make a linear regression model

In [0]:

# This notebook uses the TensorFlow 1.x API (tf.Session, tf.train.GradientDescentOptimizer).
import tensorflow as tf

Let's set the training data

In [0]:

_x_train = [1.3,2.3,3.4]

_y_train = [1.1,2.2,3.3]

Let's set the TensorFlow variables

In [0]:

_w = tf.Variable(tf.random_normal([1]),name='weight')

_b = tf.Variable(tf.random_normal([1]),name='bias')

Model the linear function

In [0]:

_hypothesis = _x_train*_w+_b

Define the cost function

In [0]:

_cost = tf.reduce_mean(tf.square(_hypothesis - _y_train))

Use the gradient descent method and set the learning rate to 0.1

In [0]:

_optimizer = tf.train.GradientDescentOptimizer(learning_rate = 0.1)

_train = _optimizer.minimize(_cost)

Launch the graph using a session and run operations using session.run

In [0]:

_sess = tf.Session()

_sess.run(tf.global_variables_initializer())

Let's train the linear regression model

In [18]:

# Run 3000 training steps and print the progress every 200 steps.
for _step in range(3000):
    _sess.run(_train)
    if _step % 200 == 0:
        print(_step, _sess.run(_cost), _sess.run(_w), _sess.run(_b))

What are the optimal weight and bias?

In [19]:

_sess.run(_w)

Out[19]:

In [20]:

_sess.run(_b)

Out[20]:
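As an independent cross-check on the trained values, the weight and bias that minimize the mean squared error can also be computed directly with the closed-form least-squares formulas. This sketch is not part of the original notebook: it reuses the training data defined earlier in this post, and the names `w_closed` and `b_closed` are assumptions for illustration.

```python
# Training data from the notebook cells in this post.
x_train = [1.3, 2.3, 3.4]
y_train = [1.1, 2.2, 3.3]

n = len(x_train)
mean_x = sum(x_train) / n
mean_y = sum(y_train) / n

# Slope: co-variation of x and y divided by the variation of x.
s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(x_train, y_train))
s_xx = sum((x - mean_x) ** 2 for x in x_train)
w_closed = s_xy / s_xx

# Intercept: the fitted line passes through the point (mean_x, mean_y).
b_closed = mean_y - w_closed * mean_x

print(w_closed, b_closed)
```

For this data the closed-form solution is roughly w = 1.047 and b = -0.243, so a training run that has converged should report values close to these.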

Let's draw the fitted line and the training data

In [25]:

import matplotlib.pyplot as plt

import numpy as np

_x = np.arange(0,4,0.01)

plt.plot(_x,_sess.run(_w)*_x+_sess.run(_b))

plt.plot(_x_train,_y_train,'*')

plt.grid()

First. ( ? ) regression = The relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. ->

linear

Second. How do we minimize the cost function in this video? The ( ? ) method ->

gradient descent

Third. What do we call a value that the developer has to decide for themselves? ->

A value that the developer has to decide, such as the learning rate, is called a hyperparameter.

Fourth. Is this code right? No, variables must be initialized by running tf.global_variables_initializer.

Thank you for watching this video. Please like and subscribe. I appreciate your feedback in the comments.
